Macedonian LLM Eval

A benchmark for evaluating the capabilities of LLMs in Macedonian.

📋 Overview

The Macedonian LLM Eval is a benchmark designed to quantitatively measure how well large language models perform in the Macedonian language.

The benchmark covers reasoning, world knowledge, and reading comprehension tasks to provide a broad assessment. It helps researchers and developers compare models, identify strengths and weaknesses, and improve Macedonian-specific AI tools.

You can find the Macedonian LLM Eval dataset on Hugging Face. The dataset was translated from Serbian to Macedonian using the Google Translate API. Serbian was chosen as the source language instead of English because Serbian and Macedonian are linguistically closer, which makes Serbian a better starting point for translation. In addition, the Serbian dataset had been refined using GPT-4, which, according to the original report, significantly improved the quality of the translation.
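The translation step described above can be pictured with a minimal sketch. It is not the pipeline actually used for this dataset: the helper `translate_records`, the field names (`question`, `choices`, `label`), and the stand-in translator are all hypothetical. In practice, `translate_fn` would wrap a call to the Google Translate API with Serbian as the source language and Macedonian as the target.

```python
from typing import Callable, Dict, List


def translate_records(
    records: List[Dict],
    translate_fn: Callable[[str], str],
    text_fields: List[str],
) -> List[Dict]:
    """Translate the given text fields of each record; leave other fields untouched."""
    translated = []
    for record in records:
        new_record = dict(record)  # shallow copy so the input is not mutated
        for field in text_fields:
            value = record.get(field)
            if isinstance(value, str):
                new_record[field] = translate_fn(value)
            elif isinstance(value, list):
                # Answer options are often stored as a list of strings.
                new_record[field] = [
                    translate_fn(v) if isinstance(v, str) else v for v in value
                ]
        translated.append(new_record)
    return translated


def fake_translate(text: str) -> str:
    """Stand-in translator for demonstration; a real one would call a translation API."""
    return f"[mk] {text}"


# Hypothetical Serbian record; the field names are assumptions, not the dataset schema.
serbian_records = [
    {"question": "Da li je nebo plavo?", "choices": ["da", "ne"], "label": 0}
]

demo = translate_records(serbian_records, fake_translate, ["question", "choices"])
print(demo[0])
```

Passing the translator in as a function keeps labels and other non-text fields intact and makes it easy to swap in a different translation backend or to test without network access.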

🎯 What is currently covered:

- Common sense reasoning: HellaSwag, WinoGrande, PIQA, OpenbookQA, ARC-Easy, ARC-Challenge
- World knowledge: NaturalQuestions
- Reading comprehension: BoolQ

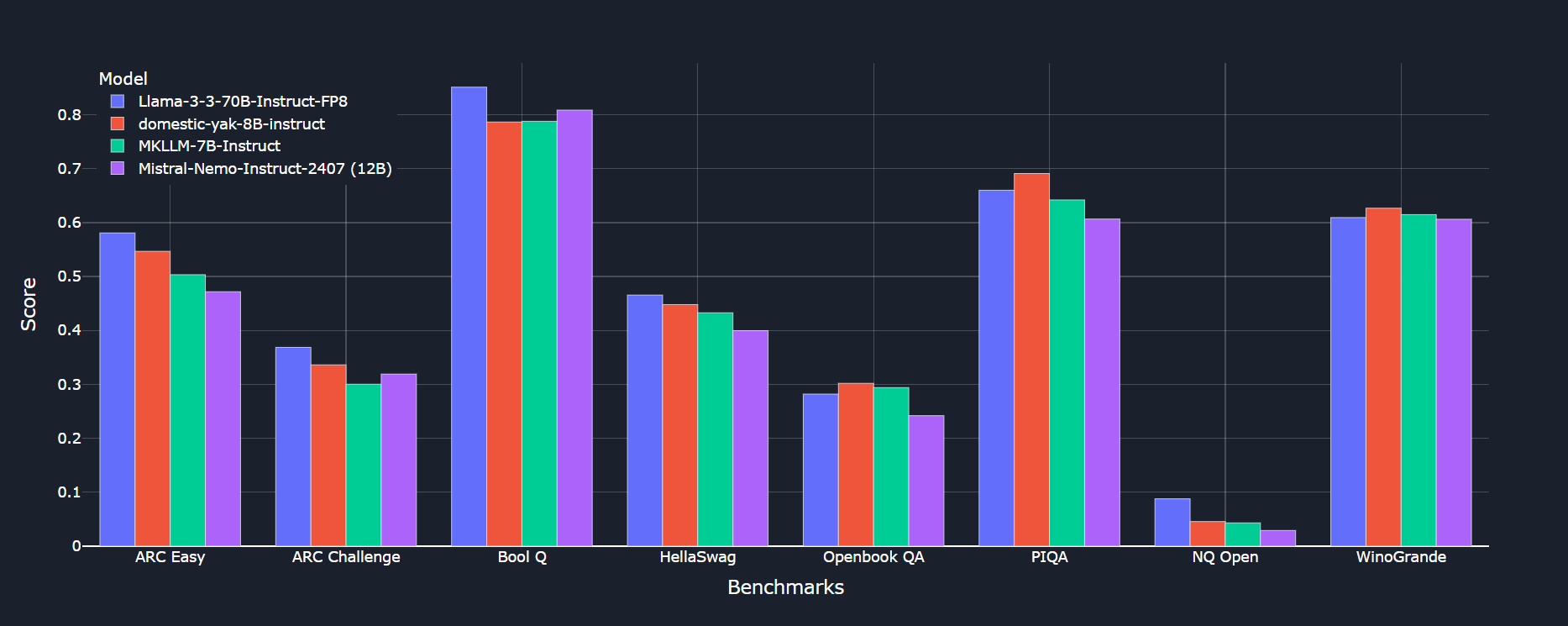

📊 Latest Results - January 16, 2025

| Model | Version | ARC Easy | ARC Challenge | Bool Q | HellaSwag | Openbook QA | PIQA | NQ Open | WinoGrande |
|---|---|---|---|---|---|---|---|---|---|
| MKLLM-7B-Instruct | 7B | 0.5034 | 0.3003 | 0.7878 | 0.4328 | 0.2940 | 0.6420 | 0.0432 | 0.6148 |
| BLOOM | 7B | 0.2774 | 0.1800 | 0.5028 | 0.2664 | 0.1580 | 0.5316 | 0 | 0.4964 |
| Phi-3.5-mini | 3.8B | 0.2887 | 0.1877 | 0.6028 | 0.2634 | 0.1640 | 0.5256 | 0.0025 | 0.5193 |
| Mistral | 7B | 0.4625 | 0.2867 | 0.7593 | 0.3722 | 0.2180 | 0.5783 | 0.0241 | 0.5612 |
| Mistral-Nemo | 12B | 0.4718 | 0.3191 | 0.8086 | 0.3997 | 0.2420 | 0.6066 | 0.0291 | 0.6062 |
| Qwen2.5 | 7B | 0.3906 | 0.2534 | 0.7789 | 0.3390 | 0.2160 | 0.5598 | 0.0042 | 0.5351 |
| LLaMA 3.1 | 8B | 0.4453 | 0.2824 | 0.7639 | 0.3740 | 0.2520 | 0.5865 | 0.0335 | 0.5683 |
| LLaMA 3.2 | 3B | 0.3224 | 0.2329 | 0.6624 | 0.2976 | 0.2060 | 0.5462 | 0.0044 | 0.5059 |
| 🏆LLaMA 3.3 - 8bit | 70B | 0.5808 | 0.3686 | 0.8511 | 0.4656 | 0.2820 | 0.6600 | 0.0878 | 0.6093 |
| domestic-yak-instruct | 8B | 0.5467 | 0.3362 | 0.7865 | 0.4480 | 0.3020 | 0.6910 | 0.0457 | 0.6267 |
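For a quick side-by-side comparison, the scores in the table can be collapsed into one number per model. The sketch below uses an unweighted mean across the eight tasks. This is an assumption made for illustration, not an official metric of the benchmark, and because the tasks differ in difficulty and chance level the average is only a rough summary.

```python
from statistics import mean

# Column order: ARC Easy, ARC Challenge, BoolQ, HellaSwag,
# OpenbookQA, PIQA, NQ Open, WinoGrande (values copied from the table).
SCORES = {
    "MKLLM-7B-Instruct": [0.5034, 0.3003, 0.7878, 0.4328, 0.2940, 0.6420, 0.0432, 0.6148],
    "BLOOM": [0.2774, 0.1800, 0.5028, 0.2664, 0.1580, 0.5316, 0.0, 0.4964],
    "Phi-3.5-mini": [0.2887, 0.1877, 0.6028, 0.2634, 0.1640, 0.5256, 0.0025, 0.5193],
    "Mistral": [0.4625, 0.2867, 0.7593, 0.3722, 0.2180, 0.5783, 0.0241, 0.5612],
    "Mistral-Nemo": [0.4718, 0.3191, 0.8086, 0.3997, 0.2420, 0.6066, 0.0291, 0.6062],
    "Qwen2.5": [0.3906, 0.2534, 0.7789, 0.3390, 0.2160, 0.5598, 0.0042, 0.5351],
    "LLaMA 3.1": [0.4453, 0.2824, 0.7639, 0.3740, 0.2520, 0.5865, 0.0335, 0.5683],
    "LLaMA 3.2": [0.3224, 0.2329, 0.6624, 0.2976, 0.2060, 0.5462, 0.0044, 0.5059],
    "LLaMA 3.3 - 8bit": [0.5808, 0.3686, 0.8511, 0.4656, 0.2820, 0.6600, 0.0878, 0.6093],
    "domestic-yak-instruct": [0.5467, 0.3362, 0.7865, 0.4480, 0.3020, 0.6910, 0.0457, 0.6267],
}


def rank_by_mean(scores):
    """Return (model, mean score) pairs sorted from highest to lowest mean."""
    averaged = {model: mean(values) for model, values in scores.items()}
    return sorted(averaged.items(), key=lambda item: item[1], reverse=True)


ranking = rank_by_mean(SCORES)
for model, avg in ranking:
    print(f"{model:<22} {avg:.4f}")
```

Per-task scores remain the more informative view: a model can lead on one task (for example PIQA) while trailing on another, and the mean hides that.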

See our GitHub for more details.